Capturing Evidence from AWS

We previously discussed how to upload evidence into our AWS environment for analysis. This clearly has benefits, since very high-spec systems can be spun up in a matter of minutes. That said, there are also inhibiting factors, such as bandwidth availability, cost and legal issues. I don’t wish to cover too many of the technical elements, as there are mountains of information on Amazon’s site. Instead, we shall now cover some scenarios where we may need to capture evidence from AWS.

In any AWS evidence collection you are likely to require:

1: Appropriate access to the platform (if it’s not your own), including credentials and audit logging for your access.

2: Analysis systems (scope AWS specifications prior to starting) with a full suite of tools, in your own AWS environment or your client’s.

3: A thorough understanding of the infrastructure, evidence sources, time frames and objectives.

4: An evidence naming convention to apply as tags to volumes or snapshots, which can be used for continuity, chain of custody and later identification of your evidence volumes.

5: For larger environments, a plan for what you will do with any volumes, snapshots or AMIs once your collection/analysis phase is complete, e.g. download or deletion.

(At scale, this approach of image preservation will cost a number of bit-schmeckles. An exit strategy which allows for maximum data integrity, evidence continuity and cost-effectiveness will pre-empt any difficult cost implications or conversations further down the track.)
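As an illustration of the naming convention in point 4, here is a minimal sketch that builds a consistent set of tags for one evidence item. The tag keys (`CaseId`, `EvidenceNo`, `Examiner` and so on) and the `CASE-EVnnn` naming pattern are my own assumptions for the example, not an AWS or industry standard, so adapt them to whatever your case management process uses.

```python
from datetime import datetime, timezone


def build_evidence_tags(case_id, evidence_no, examiner, source_id, collected_at=None):
    """Return EC2-style tags (a list of Key/Value dicts) for one evidence item.

    All tag keys here are hypothetical; choose names that suit your own process.
    """
    collected_at = collected_at or datetime.now(timezone.utc)
    evidence_label = f"{evidence_no:03d}"
    return [
        {"Key": "Name", "Value": f"{case_id}-EV{evidence_label}"},
        {"Key": "CaseId", "Value": case_id},
        {"Key": "EvidenceNo", "Value": evidence_label},
        {"Key": "Examiner", "Value": examiner},
        # The volume, snapshot or AMI this evidence item was derived from.
        {"Key": "SourceResource", "Value": source_id},
        {"Key": "CollectedUtc", "Value": collected_at.strftime("%Y-%m-%dT%H:%M:%SZ")},
    ]
```

A list in this shape can be passed as the `Tags` argument when tagging a volume or snapshot through the EC2 API, which keeps the same identifiers on every resource derived from one piece of evidence.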

Sharing Evidence between AWS Accounts

There may be a few reasons why you would want to share a particular snapshot from your target environment. The target environment may be compromised, the client may not wish to have you on-site for a number of reasons, or you may just want to share snapshots to your own account, as this is where you have all your beefy instances and tools configured.

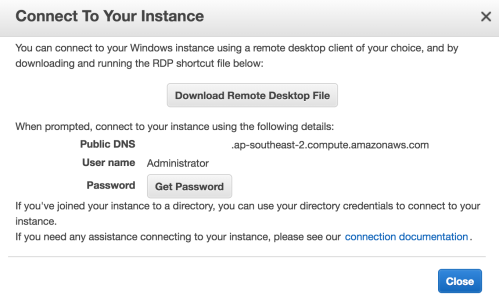

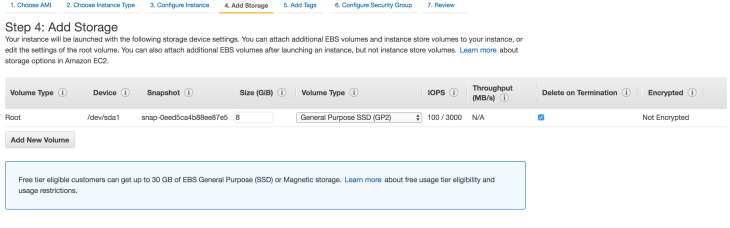

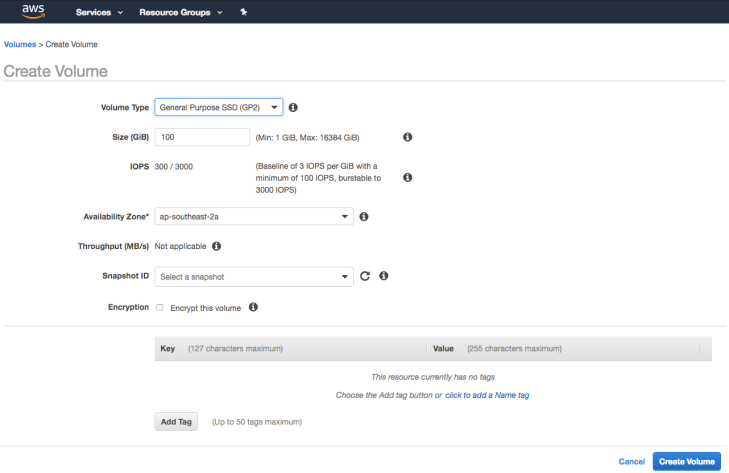

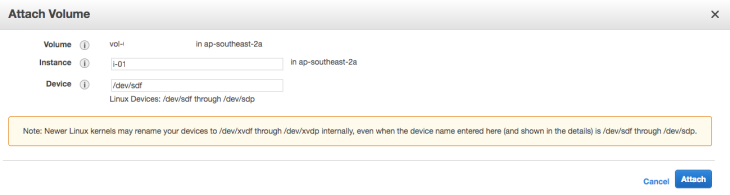

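To share a snapshot from your target environment, you will need to establish which AMI or snapshot you wish to acquire, share that snapshot with your own account, and then create a volume from it on your own platform. (EBS volumes themselves cannot be shared between accounts; it is the snapshot that carries the sharing permission.)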
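The sharing step can be sketched roughly as follows with boto3. On AWS it is the snapshot whose permissions are modified, and the volume is then created from it in the receiving account. The function names, the two-client arrangement and the example IDs are assumptions made for illustration; the functions simply take an EC2 client object so they can be exercised without touching a real account.

```python
def share_snapshot(ec2_source, snapshot_id, analyst_account_id):
    """In the target account, let another AWS account create volumes from a snapshot."""
    ec2_source.modify_snapshot_attribute(
        SnapshotId=snapshot_id,
        Attribute="createVolumePermission",
        OperationType="add",
        UserIds=[analyst_account_id],
    )


def create_evidence_volume(ec2_analyst, snapshot_id, availability_zone, tags):
    """In the analyst account, create a tagged volume from the shared snapshot."""
    response = ec2_analyst.create_volume(
        SnapshotId=snapshot_id,
        AvailabilityZone=availability_zone,
        TagSpecifications=[{"ResourceType": "volume", "Tags": tags}],
    )
    return response["VolumeId"]


# Hypothetical usage, assuming boto3 is installed and two credential profiles exist:
#   import boto3
#   source = boto3.Session(profile_name="client").client("ec2", region_name="eu-west-1")
#   mine = boto3.Session(profile_name="analyst").client("ec2", region_name="eu-west-1")
#   share_snapshot(source, "snap-0123456789abcdef0", "111122223333")
#   create_evidence_volume(mine, "snap-0123456789abcdef0", "eu-west-1a",
#                          [{"Key": "CaseId", "Value": "IR2024"}])
```

The volume has to be created in the same region as the shared snapshot and in the same availability zone as the analysis instance it will be attached to. Encrypted snapshots bring extra key-sharing requirements that this sketch does not cover.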

I won’t discuss hashing, but it should be self-explanatory that you need to establish a level of certainty about your evidence, particularly when physically moving data through data centres or downloading from the AWS platform.
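Purely for completeness, a minimal sketch of hashing a downloaded image (or any large file) in chunks, so the digest can be recorded before and after a transfer. The function name and the choice of SHA-256 are my assumptions; use whichever algorithm your procedures call for.

```python
import hashlib


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in fixed-size chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read until read() returns an empty bytes object (end of file).
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Reading in chunks keeps memory use flat regardless of image size, which matters when the evidence file is hundreds of gigabytes.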

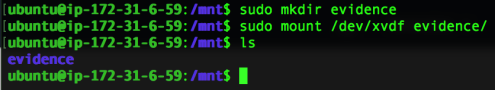

Once you have the volume in your own platform, you will need to mount it read-only, at which point you can proceed to interrogate it with forensic tools as you normally would.
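One way the read-only mount might look, assuming a Linux analysis instance and an ext4 evidence volume (both assumptions on my part; a Windows or NTFS setup would need different tooling and options). The device and mount point names are hypothetical. The sketch marks the block device read-only at the kernel level first, then mounts the partition with options that avoid journal replay.

```python
import subprocess


def readonly_mount_commands(device, partition, mount_point):
    """Build the commands to set a device read-only and mount one partition from it."""
    return [
        # Flag the whole block device as read-only before anything mounts it.
        ["blockdev", "--setro", device],
        # ro: read-only; noload: skip ext3/ext4 journal replay; noexec: no execution.
        ["mount", "-o", "ro,noload,noexec", partition, mount_point],
    ]


def mount_readonly(device, partition, mount_point, run=subprocess.run):
    """Run the commands in order, stopping on the first failure. Requires root."""
    for command in readonly_mount_commands(device, partition, mount_point):
        run(command, check=True)


# Hypothetical usage on the analysis instance:
#   mount_readonly("/dev/xvdf", "/dev/xvdf1", "/mnt/evidence")
```

Keeping the command list separate from the code that runs it also gives you something concrete to paste into your case notes.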

You may wish to capture a forensic image of this volume at this time for continuity or for download and offline analysis. Depending on your case requirements and time constraints, you may also wish to interact with it directly in order to reach quick results.

Provided you have taken appropriate measures to capture your snapshots and volumes, interacting directly with the write-blocked volume in this way is an acceptable approach for performing Incident Response (in my humble opinion).

That’s how I approached sharing a volume from the target environment to my own for imaging and analysis.

Analysis in Your Target Environment

The other scenario you may face is where your client hosts data in regions which are outside of your legal jurisdiction, or has non-disclosure agreements or other contractual obligations which affect your ability to physically take evidence away from their environment.

This can drastically affect your ability to stick to the old dead-box approach, where you capture the forensic image and download it for offline analysis. I recently worked on a case where no client data (stored in AWS) was to be accessed or taken outside of the client’s offices.

The approach devised involved setting up analysis workstations in multiple regions all over the world to mount volumes from systems which we suspected to be involved in the incident. We had to access these systems from within our client’s offices and, throughout the process, collect only evidence which contained no client data. Essentially, this led to the bulk of our incident response investigation revolving around event logs, registry hives and file system metadata in order to meet these requirements.

This approach is essentially the same as sharing volumes to your own instance, although there are other implications:

1: Hardware dongles – You may wish to use hardware-licensed software. We used VirtualHere to share our dongles up to AWS and this worked exceptionally well, although there were some firewall and routing issues.

2: IT assistance – Like all cases, administrators of the target infrastructure should be your best friends. Chances are you will need a lot of help from these guys when getting their environment configured to work for you.

3: Time – Depending on the amount of evidence you have to capture, time will definitely be against you. This is quite simply due to the way that AWS manages snapshots and volumes. You need to be meticulous when creating volumes and documenting your actions.

4: Time – Because we never have enough of it…
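On the point about documenting your actions, here is a minimal sketch of an append-only action log, written as one JSON object per line with a UTC timestamp. The field names and the log format are my assumptions, not a prescribed standard; the idea is just that every snapshot, volume or attachment you create gets a timestamped entry at the moment you create it.

```python
import json
from datetime import datetime, timezone


def log_action(log_path, examiner, action, resource_id, notes=""):
    """Append one timestamped entry to a JSON-lines action log and return it."""
    entry = {
        "utc": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "examiner": examiner,
        "action": action,
        "resource_id": resource_id,
        "notes": notes,
    }
    # Append mode, so earlier entries are never rewritten.
    with open(log_path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")
    return entry
```

For example, `log_action("actions.jsonl", "jdoe", "create_volume", "vol-0123456789abcdef0", "from shared snapshot")` would add a single line to a hypothetical `actions.jsonl` file.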

So that’s a very high-level view of the approach I’ve used for capturing and analysing evidence in AWS.

Reference:

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/sharingamis-explicit.html